LALNet (ICML 2025)

Learning Adaptive Lighting via Channel-Aware Guidance

Abstract:

Learning lighting adaptation is a crucial step in achieving good visual perception and supporting downstream vision tasks. Current research often addresses individual light-related challenges, such as high dynamic range imaging and exposure correction, in isolation. However, we identify fundamental properties shared across these tasks: i) different color channels have different light properties, and ii) these channel differences manifest differently in the spatial and frequency domains. Leveraging these insights, we introduce the channel-aware Learning Adaptive Lighting Network (LALNet), a multi-task framework designed to handle multiple light-related tasks efficiently. Specifically, LALNet incorporates color-separated features that highlight the unique light properties of each color channel and integrates them with traditional color-mixed features through Light Guided Attention (LGA). The LGA uses color-separated features to guide color-mixed features to focus on channel differences while ensuring visual consistency across all channels. Additionally, LALNet employs dual domain channel modulation to generate color-separated features, and mixed channel modulation together with a light state space module to produce color-mixed features. Extensive experiments on four representative light-related tasks demonstrate that LALNet significantly outperforms state-of-the-art methods on benchmark tests and requires fewer computational resources.
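The two observations in the abstract (channels differ in their light properties, and those differences look different in the spatial and frequency domains) can be illustrated with a small standalone sketch. This is not LALNet code: the function names, the toy patch, and the choice of statistics (mean intensity as a spatial measure, share of spectral energy outside the DC term as a frequency measure) are assumptions made purely for illustration.

```python
import cmath

def channel_mean(channel):
    """Spatial-domain statistic: mean intensity of one channel (2D list)."""
    values = [v for row in channel for v in row]
    return sum(values) / len(values)

def dft2_amplitude(channel):
    """Naive 2D DFT amplitude spectrum. O(N^4), so only for tiny patches."""
    h, w = len(channel), len(channel[0])
    amp = [[0.0] * w for _ in range(h)]
    for u in range(h):
        for v in range(w):
            acc = 0j
            for y in range(h):
                for x in range(w):
                    angle = -2 * cmath.pi * (u * y / h + v * x / w)
                    acc += channel[y][x] * cmath.exp(1j * angle)
            amp[u][v] = abs(acc)
    return amp

def non_dc_energy_ratio(channel):
    """Frequency-domain statistic: share of spectral energy outside the DC term."""
    amp = dft2_amplitude(channel)
    total = sum(a * a for row in amp for a in row)
    dc = amp[0][0] ** 2
    return 0.0 if total == 0 else (total - dc) / total

def channel_stats(image):
    """image: dict mapping channel name -> 2D list of intensities in [0, 1]."""
    return {
        name: (channel_mean(ch), non_dc_energy_ratio(ch))
        for name, ch in image.items()
    }

# Hypothetical 4x4 patch: R is flat and bright, G is a checkerboard, B is flat and dark.
toy_patch = {
    "R": [[0.8] * 4 for _ in range(4)],
    "G": [[0.2 if (x + y) % 2 == 0 else 0.6 for x in range(4)] for y in range(4)],
    "B": [[0.1] * 4 for _ in range(4)],
}

stats = channel_stats(toy_patch)
for name, (mean, ratio) in stats.items():
    print(f"{name}: mean={mean:.3f}, non-DC energy share={ratio:.3f}")
```

In this toy patch, R and B differ only in the spatial statistic (brightness), while G stands apart in the frequency statistic because of its texture. That is the kind of per-channel, per-domain difference the abstract refers to, shown here on made-up data rather than on any benchmark.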

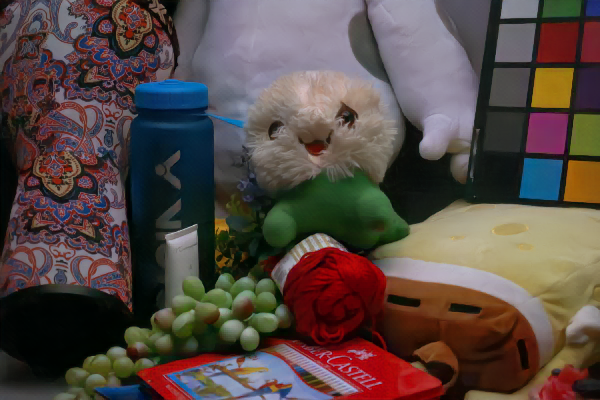

Comparison of LALNet with other methods on Image Retouching dataset (HDR+)

Compared methods: Restormer, Ours (LALNet), RetinexMamba.

Comparison of LALNet with other methods on Exposure Correction dataset (SCIE)

Compared methods: Restormer, Ours (LALNet), RetinexMamba.

Comparison of LALNet with other methods on Low-Light Enhancement dataset (LOL)

Compared methods: Restormer, Ours (LALNet), RetinexMamba.

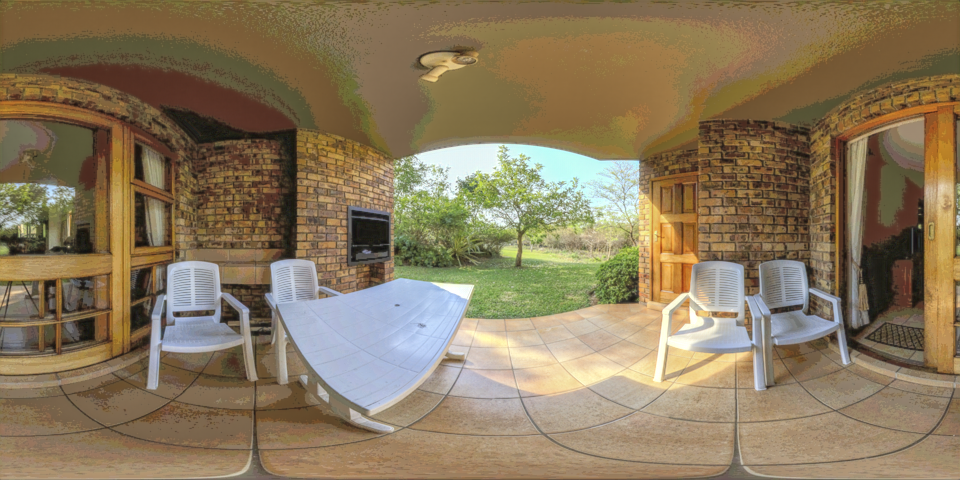

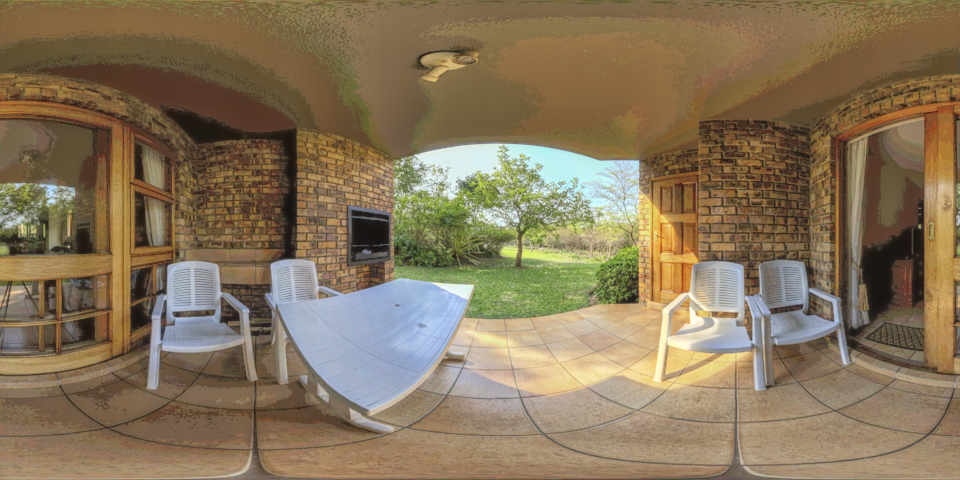

Comparison of LALNet with other methods on Tone Mapping dataset (HDRI Haven)

Compared methods: Restormer, Ours (LALNet), RetinexMamba.

Comparison of LALNet with other methods on HDR Survey dataset (Generalization Validation)

Compared methods: CLUT, Ours (LALNet), LPTN.

Additional LALNet visual results on the HDR Survey dataset (Generalization Validation)

Panels: HDR input, LALNet result.